HCL cluster

General Information

The HCL cluster is heterogeneous in both computing hardware and network capability.

Nodes are from Dell, IBM, and HP, with Celeron, Pentium 4, Xeon, and AMD processors ranging in speed from 1.8 to 3.6 GHz. Accordingly, architectures and parameters such as front-side bus, cache, and main memory all vary.

The operating system is Debian “squeeze” with Linux kernel 2.6.32.

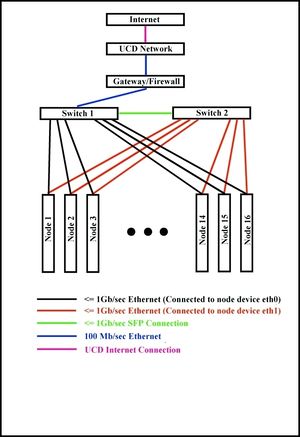

The network hardware consists of two Cisco 24+4 port Gigabit switches. Each node has two Gigabit Ethernet ports: each eth0 is connected to the first switch, and each eth1 is connected to the second switch. The switches are also connected to each other. The bandwidth of each port can be set to any value between 8 Kb/s and 1 Gb/s. This allows testing on a very large number of network topologies. As the bandwidth on the link connecting the two switches can also be configured, the cluster can act as two separate clusters connected via one link.

The diagram below shows a schematic of the cluster.

Detailed Cluster Specification

A table of hardware configuration is available here: Cluster Specification

Cluster Administration

Useful Tools

root on heterogeneous.ucd.ie has a number of Expect scripts to automate administration on the cluster (in /root/scripts). root_ssh will automatically log into a host, provide the root password and either return a shell to the user or execute a command that is passed as a second argument. Command syntax is as follows:

# root_ssh

usage: root_ssh [user@]<host> [command]

Example usage, to login and execute a command on each node in the cluster (note the file /etc/dsh/machines.list contains the hostnames of all compute nodes of the cluster):

# for i in `cat /etc/dsh/machines.list`; do root_ssh $i ps ax \| grep pbs; done

The above is sequential. To run jobs in parallel, for example apt-get update && apt-get -y upgrade, try the following trick with screen:

# for i in `cat /etc/dsh/machines.list`; do screen -L -d -m root_ssh $i apt-get update \&\& apt-get -y upgrade; done

You can check the screenlog.* files for errors and delete them when you are happy. Sometimes all logs end up in screenlog.0; the cause has not been confirmed.
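As an alternative to the screen trick, the same fan-out can be sketched in Python. This is a minimal sketch, assuming root_ssh is on root's PATH and accepts the whole remote command as a single second argument; the function names and the `launcher` parameter are hypothetical and exist only for illustration. Output is captured per host instead of being written to screenlog.* files.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor


def read_hosts(path="/etc/dsh/machines.list"):
    """Return the hostnames in the file, skipping blank and comment lines."""
    hosts = []
    with open(path) as f:
        for line in f:
            line = line.strip()
            if line and not line.startswith("#"):
                hosts.append(line)
    return hosts


def run_on_host(host, remote_cmd, launcher=("root_ssh",)):
    """Run remote_cmd on one host; return (host, exit status, combined output)."""
    proc = subprocess.run(
        [*launcher, host, remote_cmd],  # assumed form: root_ssh <host> <command>
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,
        text=True,
    )
    return host, proc.returncode, proc.stdout


def run_everywhere(hosts, remote_cmd, launcher=("root_ssh",), workers=16):
    """Run remote_cmd on all hosts concurrently; results keep the host order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda h: run_on_host(h, remote_cmd, launcher), hosts))
```

Calling `run_everywhere(read_hosts(), "apt-get update && apt-get -y upgrade")` would return one `(host, status, output)` tuple per node, so failures can be spotted by checking for a non-zero status.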

Software packages available on HCL Cluster 2.0

With a fresh installation of the operating system on the HCL Cluster, the following packages are available:

- autoconf

- automake

- fftw2

- git

- gfortran

- gnuplot

- libtool

- netperf

- octave3.2

- qhull

- subversion

- valgrind

- gsl-dev

- vim

- python

- mc

- openmpi-bin

- openmpi-dev

- evince

- libboost-graph-dev

- libboost-serialization-dev

- r-cran-strucchange

- graphviz

- doxygen

new hcl node install & configuration log

new heterogeneous.ucd.ie install log

Access and Security

All access and security for the cluster is handled by the gateway machine (heterogeneous.ucd.ie). This machine is not considered a compute node and should not be used as such. The only new incoming connections allowed are ssh. Other incoming packets, such as http responses to requests made from inside the cluster (established or related), are also allowed. Incoming ssh packets are accepted only if they originate from designated IP addresses, which must be registered UCD addresses. csserver.ucd.ie is allowed, as is hclgate.ucd.ie, on which all users have accounts. Thus, to gain access to the cluster, you can ssh from csserver, hclgate or another allowed machine to heterogeneous. From there you can ssh to any of the nodes (hcl01-hcl16).

Access from outside the UCD network is only possible after you have logged in to a server that allows outside connections (such as csserver.ucd.ie).

Some networking issues on HCL cluster (unsolved)

"/sbin/route" should give:

    Kernel IP routing table
    Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
    239.2.11.72     *               255.255.255.255 UH    0      0        0 eth0
    heterogeneous.u *               255.255.255.255 UH    0      0        0 eth0
    192.168.21.0    *               255.255.255.0   U     0      0        0 eth1
    192.168.20.0    *               255.255.255.0   U     0      0        0 eth0
    192.168.20.0    *               255.255.254.0   U     0      0        0 eth0
    192.168.20.0    *               255.255.254.0   U     0      0        0 eth1
    default         heterogeneous.u 0.0.0.0         UG    0      0        0 eth0

For reasons that are unclear, many machines are sometimes missing the entry:

192.168.21.0 * 255.255.255.0 U 0 0 0 eth1

With Open MPI, this causes the sockets "connect" call to any 192.168.21.* address to hang. In this case, you can either

- exclude eth1 from Open MPI's TCP communication (see also [1]):

mpirun --mca btl_tcp_if_exclude lo,eth1 ...

or

- restore the above table on all nodes by running "sh /etc/network/if-up.d/00routes" as root.

It is not yet clear why, without this entry, connections to the "21" addresses fail. We would expect the following rule to match in this case (because of the mask):

192.168.20.0 * 255.255.254.0 U 0 0 0 eth0

The packets would then leave over the eth0 network interface and should travel via switch1 to switch2 and on to the eth1 interface of the destination node.
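The expectation about the mask can be checked with a small longest-prefix-match sketch using Python's standard ipaddress module. It covers only the four subnet routes from the routing table; the `lookup` helper and the sample address 192.168.21.5 are illustrative assumptions, and the tie-break between equal-length prefixes (first listed wins) is a simplification that may not match what the kernel does.

```python
import ipaddress

# Subnet routes transcribed from the routing table: (destination, netmask, interface).
ROUTES = [
    ("192.168.21.0", "255.255.255.0", "eth1"),
    ("192.168.20.0", "255.255.255.0", "eth0"),
    ("192.168.20.0", "255.255.254.0", "eth0"),
    ("192.168.20.0", "255.255.254.0", "eth1"),
]


def lookup(routes, address):
    """Return (network, interface) of the most specific matching route, or None."""
    addr = ipaddress.ip_address(address)
    best = None
    for dest, mask, iface in routes:
        net = ipaddress.ip_network(f"{dest}/{mask}")
        # Keep the longest prefix; on a tie the earlier entry is kept (assumption).
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, iface)
    return best


# Healthy table: the /24 entry on eth1 is the most specific match.
print(lookup(ROUTES, "192.168.21.5"))
# Damaged table (the 192.168.21.0 entry is missing): the /23 entry still matches.
print(lookup(ROUTES[1:], "192.168.21.5"))
```

Under these assumptions the damaged table still yields a match, on 192.168.20.0/23 via eth0, which is consistent with the expectation that the packets should at least leave the node.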

If one attempts a ping from one node A, via its eth0 interface, to the address of another node's (B) eth1 interface, the following is observed:

- outgoing ping packets appear only on the eth0 interface of the first node A.

- incoming ping packets appear only on the eth1 interface of the second node B.

- outgoing ping response packets appear on the eth0 interface of the second node B, never on the eth1 interface, despite pinging the eth1 address specifically.

What explains this? With the routing tables as they are above, or in the damaged case, the ping may arrive at the correct interface, but the response from B is routed to A-eth0 via B-eth0. Further, after a number of ping packets have been sent in sequence (50 to 100), pings from A begin to appear on both A-eth0 and A-eth1, even though the -I eth0 switch is specified. This behaviour is unexpected, but does not affect the return path of the ping response packet.

To get symmetric behaviour, where a packet leaves A-eth0, travels via the switch bridge to B-eth1 and returns from B-eth1 to A-eth0, one must ensure the routing table of B contains no eth0 entries.

Paging and the OOM-Killer

Due to the nature of the experiments run on the cluster, we often induce heavy paging and complete exhaustion of available memory on certain nodes. Linux has a pair of strategies to deal with heavy memory use. The first is overcommitting, where a process is allowed to allocate or fork even when there is no more memory available. You can see some interesting numbers here: [2]. The assumption is that processes may not use all the memory they allocate, and that failing at allocation is worse than failing later, when the memory is actually needed. More processes can be supported by allowing them to allocate memory (provided they do not use it all). The second part of the strategy is the Out-of-Memory killer (OOM killer). When memory has been exhausted and a process tries to use some 'overcommitted' part of memory, the OOM killer is invoked. Its job is to rank all processes in terms of their memory use, priority, privilege and some other parameters, and then select a process to kill based on the ranks.

The argument for using overcommit plus the OOM killer is that rather than failing to allocate memory for some random unlucky process, which as a result would probably terminate, the kernel can instead allow the unlucky process to continue executing and then make a somewhat informed decision on which process to kill. Unfortunately, the behaviour of the OOM killer sometimes causes problems that grind the machine to a complete halt, particularly when it decides to kill system processes. There is a good discussion of the OOM killer here: [3]
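To see which processes the OOM killer would currently favour, the kernel exposes a per-process score in /proc/<pid>/oom_score, where a higher value means the process is more likely to be killed. The following is a minimal sketch, assuming a Linux /proc filesystem; the `oom_candidates` helper and its parameters are hypothetical names used for illustration.

```python
import os


def oom_candidates(proc_root="/proc", top=5):
    """Return up to `top` (oom_score, pid) pairs, highest score first."""
    rows = []
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue  # only numeric directory names are process IDs
        try:
            with open(os.path.join(proc_root, entry, "oom_score")) as f:
                score = int(f.read())
        except (OSError, ValueError):
            continue  # the process exited or the file was unreadable
        rows.append((score, int(entry)))
    return sorted(rows, reverse=True)[:top]
```

Running `oom_candidates()` on a node under memory pressure gives a rough idea of which process would be selected next; it only reads the scores and does not change any kernel setting.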

If you notice that a machine on the cluster has gone down (particularly hcl11), this is the likely cause. We could consider disabling overcommit, which would probably result in the termination of the experiments that cause the heavy paging rather than some other system process. This would probably be the most desirable outcome, but it is up for discussion.
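Before changing anything, the current policy on a node can be read from /proc/sys/vm/overcommit_memory. The sketch below maps the three standard kernel values (0, 1 and 2) to a short description; the helper names are hypothetical, and the descriptions are paraphrases rather than kernel output.

```python
# Meaning of the vm.overcommit_memory values on Linux.
OVERCOMMIT_MODES = {
    0: "heuristic overcommit (kernel default)",
    1: "always overcommit",
    2: "strict accounting, no overcommit",
}


def describe_overcommit(raw):
    """Translate the raw file content (e.g. '0\\n') into a description."""
    mode = int(raw.strip())
    return OVERCOMMIT_MODES.get(mode, "unknown mode")


def read_overcommit(path="/proc/sys/vm/overcommit_memory"):
    """Read and describe the overcommit policy of the local node."""
    with open(path) as f:
        return describe_overcommit(f.read())
```

Disabling overcommit as discussed above would correspond to mode 2, which can be set with sysctl vm.overcommit_memory=2. Whether that is appropriate for the cluster remains an open question.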