HCL cluster

Latest revision as of 13:53, 9 September 2013


General Information

The HCL cluster is heterogeneous in both computing hardware and network capability.

Nodes are from Dell, IBM, and HP, with Celeron, Pentium 4, Xeon, and AMD processors running at clock speeds from 1.8 to 3.6 GHz. Accordingly, architectures and parameters such as front-side bus, cache, and main memory all vary.

The operating system is Debian “squeeze” with Linux kernel 2.6.32.

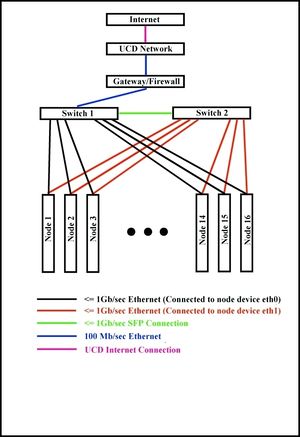

The network hardware consists of two Cisco 24+4 port Gigabit switches. Each node has two Gigabit Ethernet ports: each eth0 is connected to the first switch, and each eth1 is connected to the second switch. The switches are also connected to each other. The bandwidth of each port can be configured to any value between 8Kb/s and 1Gb/s. This allows testing on a very large number of network topologies. As the bandwidth on the link connecting the two switches can also be configured, the cluster can act as two separate clusters connected via one link.

The diagram shows a schematic of the cluster.

Detailed Cluster Specification

- HCL Cluster Specifications

- Old HCL Cluster Specifications (pre May 2010)

Documentation

- Dell Poweredge 750 Documentation

- Dell Poweredge SC1425 Documentation

- IBM x-Series 306 Documentation

- IBM e-Series 326 Documentation

- HP Proliant DL-140 G2 Documentation

- HP Proliant DL-320 G3 Documentation

- Cisco Catalyst 3560 Specifications

- Cisco Catalyst 3560 User Guide

- HCL Cluster Network

Cluster Administration

If PBS jobs do not start after a reboot of heterogeneous.ucd.ie it may be necessary to manually start maui:

/usr/local/maui/sbin/maui

Useful Tools

root on heterogeneous.ucd.ie has a number of Expect scripts to automate administration on the cluster (in /root/scripts). root_ssh will automatically log into a host, provide the root password and either return a shell to the user or execute a command that is passed as a second argument. Command syntax is as follows:

# root_ssh

usage: root_ssh [user@]<host> [command]

Example usage, to log in and execute a command on each node in the cluster (note that the file /etc/dsh/machines.list contains the hostnames of all compute nodes of the cluster):

# for i in `seq -w 1 16`; do root_ssh hcl$i ps ax \| grep pbs; done

The above is sequential. To run a command on all nodes in parallel, for example apt-get update && apt-get -y upgrade, try the following trick with screen:

# for i in `seq -w 1 16`; do screen -L -d -m root_ssh hcl$i apt-get update \&\& apt-get -y upgrade; done

You can check the screenlog.* files for errors and delete them when you are satisfied. Sometimes all logs are sent to screenlog.0; the reason is not known.
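As an alternative to the screen trick, the same fan-out can be sketched with plain background jobs and one log file per node, which sidesteps the question of which screenlog file receives the output. This is only a sketch: the function names, the RUNNER hook and the LOG_DIR variable are hypothetical, and it has not been tried against the root_ssh Expect script itself.

```shell
# Print the compute node hostnames hcl01 .. hclNN (default 16, as on this cluster).
hcl_nodes() {
    for i in $(seq -w 1 "${1:-16}"); do
        echo "hcl$i"
    done
}

# Run a command on every node in parallel, writing one log file per node.
# RUNNER is a hypothetical hook: it defaults to root_ssh, but can be set to
# "echo" for a dry run. LOG_DIR (also hypothetical) defaults to the current directory.
run_on_all_nodes() {
    for node in $(hcl_nodes); do
        ${RUNNER:-root_ssh} "$node" "$@" > "${LOG_DIR:-.}/$node.log" 2>&1 &
    done
    wait  # block until every background job has finished
}
```

A dry run such as `RUNNER=echo; run_on_all_nodes uptime` writes the command line that would have been used into hcl01.log to hcl16.log without contacting any node.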

Software packages available on HCL Cluster 2.0

With a fresh installation of the operating system on the HCL Cluster, the following packages are available:

- autoconf

- automake

- gcc

- ctags

- cg-vg

- fftw2

- git

- gfortran

- gnuplot

- libtool

- netperf

- octave3.2

- qhull

- subversion

- valgrind

- gsl-dev

- vim

- python

- mc

- openmpi-bin

- openmpi-dev

- evince

- libboost-graph-dev

- libboost-serialization-dev

- libatlas-base-dev

- r-cran-strucchange

- graphviz

- doxygen

- colorgcc

new hcl node install & configuration log

new heterogeneous.ucd.ie install log

APT

To do unattended updates on cluster machines you need to specify some environment variables and switches to apt-get:

export DEBIAN_FRONTEND=noninteractive
apt-get -q -y upgrade

NOTE: on hcl01 and hcl02 any updates to grub will force a prompt, despite the switches above. This happens because there are two disks on these machines and grub asks which it should install itself on.
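The environment variable can also be set for a single apt-get invocation by prefixing the command with the assignment, so it does not linger in the shell. The wrapper below is a minimal sketch; the function name and the APT_GET override (useful for a dry run) are hypothetical and not part of the cluster scripts.

```shell
# Run an unattended upgrade. DEBIAN_FRONTEND is set only for this one command.
# APT_GET is a hypothetical hook so the function can be dry-run with APT_GET=echo.
unattended_upgrade() {
    DEBIAN_FRONTEND=noninteractive ${APT_GET:-apt-get} -q -y upgrade
}
```

With `APT_GET=echo` the function only prints the switches it would pass, which is a cheap way to check the command line before running it on every node.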

Access and Security

All access and security for the cluster is handled by the gateway machine (heterogeneous.ucd.ie). This machine is not considered a compute node and should not be used as such. The only new incoming connections allowed are ssh; other incoming packets, such as http, that are responding to requests from inside the cluster (established or related) are also allowed. Incoming ssh packets are only accepted if they originate from designated IP addresses. These IPs must be registered UCD IPs. csserver.ucd.ie is allowed, as is hclgate.ucd.ie, on which all users have accounts. Thus, to gain access to the cluster, you can ssh from csserver, hclgate or other allowed machines to heterogeneous. From there you can ssh to any of the nodes (hcl01-hcl16) that you are running a PBS job on.

Access from outside the UCD network is only allowed once you have gained entry to a server that allows outside connections (such as csserver.ucd.ie).

Creating new user accounts

As root on heterogeneous run:

adduser <username>
make -C /var/yp

Access to the nodes is controlled by Torque PBS.

Use qsub to submit a job; -I requests an interactive session and walltime is the time required.

qsub -I -l walltime=1:00:00   # Reserve 1 node for 1 hour
qsub -l nodes=hcl01+hcl07,walltime=1:00 myscript.sh
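Torque reads walltime as [[HH:]MM:]SS, so a value with only one colon is minutes and seconds, not hours and minutes. The helper below is a small sketch (the function name is hypothetical) that converts a duration in minutes to the unambiguous HH:MM:SS form.

```shell
# Convert a duration in whole minutes to Torque's HH:MM:SS walltime format.
walltime_from_minutes() {
    printf '%02d:%02d:00\n' "$(( $1 / 60 ))" "$(( $1 % 60 ))"
}
```

For example, `qsub -I -l walltime=$(walltime_from_minutes 60)` would request an interactive session of one hour.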

Example Script:

#!/bin/sh
#General Script
#
#
#These commands set up the Grid Environment for your job:
#PBS -N JOBNAME
#PBS -l walltime=48:00:00
#PBS -l nodes=16
#PBS -m abe
#PBS -k eo
#PBS -V
echo foo
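Inside a running job, Torque sets the PBS_NODEFILE environment variable to the path of a file listing the allocated nodes, one line per processor slot. The sketch below shows how a job script might report how many distinct nodes it received; the function name is hypothetical.

```shell
# Count the distinct hosts in a Torque node file (one line per allocated slot).
count_job_nodes() {
    sort -u "$1" | wc -l | tr -d ' '
}

# Only meaningful inside a PBS job, where PBS_NODEFILE is set.
if [ -n "${PBS_NODEFILE:-}" ]; then
    echo "Job is running on $(count_job_nodes "$PBS_NODEFILE") node(s)"
fi
```

The guard means the snippet does nothing when run outside a job, so it can be pasted after the #PBS directives of a script and tested interactively first.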

To see the queue:

qstat -n
showq

To remove your job:

qdel JOBNUM

More info: http://www.clusterresources.com/products/torque/docs/

Some networking issues on HCL cluster (unsolved)

"/sbin/route" should give:

Kernel IP routing table
Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
239.2.11.72     *               255.255.255.255 UH    0      0        0 eth0
heterogeneous.u *               255.255.255.255 UH    0      0        0 eth0
192.168.21.0    *               255.255.255.0   U     0      0        0 eth1
192.168.20.0    *               255.255.255.0   U     0      0        0 eth0
192.168.20.0    *               255.255.254.0   U     0      0        0 eth0
192.168.20.0    *               255.255.254.0   U     0      0        0 eth1
default         heterogeneous.u 0.0.0.0         UG    0      0        0 eth0

For reasons that are unclear, many machines are sometimes missing the entry:

192.168.21.0 * 255.255.255.0 U 0 0 0 eth1

For Open MPI, this causes a sockets "connect" call to any 192.168.21.* address to hang. In this case, you can:

- switch off eth1 for Open MPI (see also http://hcl.ucd.ie/wiki/index.php/OpenMPI):

mpirun --mca btl_tcp_if_exclude lo,eth1 ...

or

- restore the above table on all nodes by running "sh /etc/network/if-up.d/00routes" as root.
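Whether a node is affected can be checked by looking for the eth1 entry in the routing table. The following is a minimal sketch, with a hypothetical function name, that reads route output on stdin and succeeds only if the 192.168.21.0 route on eth1 is present.

```shell
# Succeeds if the routing table read from stdin contains the eth1 route
# for 192.168.21.0 with netmask 255.255.255.0.
has_eth1_route() {
    awk '$1 == "192.168.21.0" && $3 == "255.255.255.0" && $8 == "eth1" { found = 1 }
         END { exit !found }'
}
```

On a node, `/sbin/route -n | has_eth1_route || sh /etc/network/if-up.d/00routes` would then re-run the route script only when the entry is missing.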

It is not yet clear why connections to the "21" addresses fail without this entry. In this case we would expect the following rule to match (because of its mask):

192.168.20.0 * 255.255.254.0 U 0 0 0 eth0

The packets would then leave over the eth0 network interface and should travel via switch1 and switch2 to the eth1 interface of the corresponding node.
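The mask argument can be verified with a little arithmetic: an address matches a route when address AND mask equals destination AND mask. The sketch below (function names are hypothetical) shows that a 192.168.21.x address falls inside 192.168.20.0 with mask 255.255.254.0, but not with mask 255.255.255.0.

```shell
# Convert a dotted-quad IPv4 address to a single integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the address into its four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# in_subnet ADDRESS DESTINATION MASK: succeeds if the route would match ADDRESS.
in_subnet() {
    addr=$(ip_to_int "$1")
    net=$(ip_to_int "$2")
    mask=$(ip_to_int "$3")
    [ $(( addr & mask )) -eq $(( net & mask )) ]
}
```

For instance, `in_subnet 192.168.21.5 192.168.20.0 255.255.254.0` succeeds because 21 AND 254 is 20, while the same call with mask 255.255.255.0 fails.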

If one attempts a ping from node A, via its eth0 interface, to the address of node B's eth1 interface, the following is observed:

- outgoing ping packets appear only on the eth0 interface of node A.

- incoming ping packets appear only on the eth1 interface of node B.

- outgoing ping response packets appear on the eth0 interface of node B, never on the eth1 interface, despite the ping targeting the eth1 address specifically.

What explains this? With the routing tables as they are above, or in the damaged case, the ping may arrive at the correct interface, but the response from B is routed to A-eth0 via B-eth0. Further, after a number of ping packets have been sent in sequence (50 to 100), pings from A begin to appear on both A-eth0 and A-eth1, even though the -I eth0 switch is specified. This behaviour is unexpected, but does not affect the return path of the ping response packet.

In order to get a symmetric behaviour, where a packet leaves A-eth0, travels via the switch bridge to B-eth1 and returns back from B-eth1 to A-eth0, one must ensure the routing table of B contains no eth0 entries.

Paging and the OOM-Killer

Please read the Virtual Memory Overcommit page for details. For the reasons given there, overcommit has been disabled on the cluster.

cat /proc/sys/vm/overcommit_memory
2
cat /proc/sys/vm/overcommit_ratio
100

To restore the default overcommit settings:

# echo 0 > /proc/sys/vm/overcommit_memory
# echo 50 > /proc/sys/vm/overcommit_ratio
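To make the two settings concrete: overcommit_memory selects the policy (0 heuristic, 1 always overcommit, 2 strict accounting), and under policy 2 the kernel's commit limit is roughly swap plus overcommit_ratio percent of RAM. The helpers below are a sketch with hypothetical names; huge pages are ignored.

```shell
# Map the value of /proc/sys/vm/overcommit_memory to a short description.
overcommit_mode_name() {
    case $1 in
        0) echo "heuristic" ;;
        1) echo "always" ;;
        2) echo "strict" ;;
        *) echo "unknown" ;;
    esac
}

# Approximate commit limit in kB under mode 2:
# swap + RAM * overcommit_ratio / 100 (huge pages ignored in this sketch).
commit_limit_kb() {
    ram_kb=$1
    swap_kb=$2
    ratio=$3
    echo $(( swap_kb + ram_kb * ratio / 100 ))
}
```

With the cluster's ratio of 100, a node with 1 GB of RAM and 512 MB of swap could commit about 1.5 GB in total; with a ratio of 50 the same node would be limited to about 1 GB.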

Manually Limit the Memory on the OS level

Memory can be manually limited in GRUB. This should be reset when you are finished. If you are running memory-exhaustive experiments, check that the limit has not been adjusted by someone else. See Memory size, overcommit, limit for more detail.
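One way to check for a leftover limit before an experiment is to inspect the kernel command line of the running system. The sketch below assumes the limit is applied through the Linux `mem=` boot parameter, which is an assumption here (the linked page describes the procedure actually used); the function name is hypothetical.

```shell
# Print the value of a mem= parameter found in a kernel command line string.
# Returns non-zero if no mem= parameter is present.
mem_limit_from_cmdline() {
    for arg in $1; do
        case $arg in
            mem=*)
                echo "${arg#mem=}"
                return 0
                ;;
        esac
    done
    return 1
}
```

On a node, `mem_limit_from_cmdline "$(cat /proc/cmdline)" || echo "no mem= limit set"` reports whether the running kernel was booted with such a limit.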